Addressing Out-of-Distribution Challenges in Image Semantic Communication Systems with Multi-modal Large Language Models

Overview

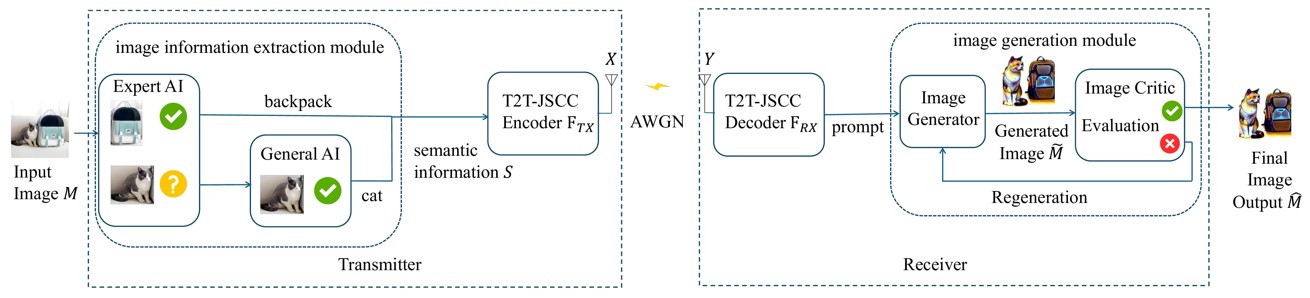

We find that the prevalent out-of-distribution (OOD) problem, in which pre-trained machine learning (ML) models are applied to unseen tasks that fall outside the distribution of their training data, can compromise the integrity of semantic compression in semantic communication schemes. To address this problem in image semantic transmission, we propose a novel image semantic communication system built on multimodal large language models (MLLMs). At the transmitter, the system combines the strengths of different models to achieve more reliable semantic compression. At the receiver, multiple MLLMs cooperate to improve the reliability of image reconstruction. Our main contributions are as follows:

i. We propose a novel “Plan A-Plan B” framework that leverages the broad knowledge and strong generalization ability of an MLLM to assist a conventional ML model whenever the latter encounters an OOD input during semantic encoding.
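The fallback logic can be illustrated with a minimal Python sketch. The source does not say how an OOD input is detected. Purely as an assumption, this sketch uses the maximum-softmax-probability heuristic: a low top-class confidence from the conventional model is treated as a sign of an OOD input. The function name `plan_a_plan_b`, the `threshold` value and the `conventional_model`/`mllm` callables are hypothetical stand-ins, not the authors' interfaces.

```python
import math
from typing import Callable, Sequence


def softmax(logits: Sequence[float]) -> list[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def plan_a_plan_b(
    image: object,
    labels: Sequence[str],
    conventional_model: Callable[[object], Sequence[float]],  # hypothetical: returns class logits
    mllm: Callable[[object], str],  # hypothetical: returns a free-text description
    threshold: float = 0.8,  # assumed OOD cut-off on top-class probability
) -> tuple[str, str]:
    """Return (semantic label, plan used).

    Plan A: trust the conventional model when it is confident.
    Plan B: fall back to the MLLM when low confidence suggests an OOD input
    (an assumed detector, not necessarily the one used by the authors).
    """
    probs = softmax(conventional_model(image))
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] >= threshold:
        return labels[best], "plan_a"
    return mllm(image), "plan_b"


if __name__ == "__main__":
    labels = ["cat", "dog"]
    fake_mllm = lambda img: "red panda"  # stand-in for an MLLM call
    print(plan_a_plan_b(None, labels, lambda img: [4.0, 0.0], fake_mllm))  # ('cat', 'plan_a')
    print(plan_a_plan_b(None, labels, lambda img: [0.2, 0.0], fake_mllm))  # ('red panda', 'plan_b')
```

Gating on confidence keeps the cheaper conventional model on the common path and calls the MLLM only when needed. The threshold would likely need tuning on in-distribution data.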

ii. We propose a Bayesian optimization scheme that reshapes the probability distribution of the MLLM’s inference process based on the contextual information of the image. The optimization scheme significantly enhances the MLLM’s performance in semantic compression by:

- Filtering out irrelevant vocabulary in the original MLLM output;

- Using the contextual similarity between the MLLM’s candidate answers and the image’s background information as prior knowledge to adjust the MLLM’s probability distribution during inference.
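The two steps above can be read as a Bayesian update: posterior(w) ∝ p_MLLM(w) × prior(w), computed over the candidate answers w that survive filtering. The source does not specify the vocabulary filter, the embedding model or the mapping from similarity to prior. This sketch therefore assumes the following:

- an allow-list filter;
- cosine similarity between precomputed embeddings;
- a softmax over similarities with a hypothetical `temperature` parameter.

All names and the toy embeddings are illustrative only.

```python
import math
from typing import Mapping, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def reshape_distribution(
    mllm_probs: Mapping[str, float],  # MLLM probabilities over candidate answers
    allowed_vocab: set[str],  # assumed allow-list used for filtering
    embeddings: Mapping[str, Sequence[float]],  # assumed precomputed word embeddings
    context_embedding: Sequence[float],  # assumed embedding of the background information
    temperature: float = 1.0,  # hypothetical sharpness of the prior
) -> dict[str, float]:
    # Step 1: drop irrelevant vocabulary from the MLLM output.
    candidates = {
        w: p for w, p in mllm_probs.items() if w in allowed_vocab and w in embeddings
    }
    if not candidates:
        raise ValueError("no candidate answers survive filtering")

    # Step 2: build a prior from contextual similarity (softmax over cosine / temperature).
    sims = {w: cosine(embeddings[w], context_embedding) / temperature for w in candidates}
    m = max(sims.values())
    prior = {w: math.exp(s - m) for w, s in sims.items()}

    # Step 3: posterior is proportional to likelihood x prior; renormalize to sum to 1.
    unnorm = {w: candidates[w] * prior[w] for w in candidates}
    z = sum(unnorm.values())
    if z == 0:
        raise ValueError("all surviving candidates have zero probability")
    return {w: v / z for w, v in unnorm.items()}


if __name__ == "__main__":
    probs = {"lake": 0.4, "sea": 0.4, "the": 0.2}  # toy MLLM output
    emb = {"lake": [1.0, 0.0], "sea": [0.0, 1.0], "the": [0.5, 0.5]}
    post = reshape_distribution(probs, {"lake", "sea"}, emb, context_embedding=[1.0, 0.0])
    print({w: round(p, 3) for w, p in post.items()})  # {'lake': 0.731, 'sea': 0.269}
```

In the toy example the MLLM is undecided between "lake" and "sea". The context embedding breaks the tie, and the filler token "the" is removed before renormalization.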

iii. On the receiver side of the communication system, we put forth a “generate-criticize” framework in which an MLLM judges the accuracy of the image reconstructed by the text-to-image model. When the reconstructed image is incorrect, the MLLM generates new prompts to guide a more accurate reconstruction, and this process is repeated iteratively.
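The generate-criticize loop can be sketched in a few lines of Python, with the text-to-image model and the critic MLLM passed in as callables. Several details are assumptions rather than the authors' design:

- the critic compares each image against the received semantic text;
- the critic returns a hypothetical `Critique` record;
- the loop is capped by a hypothetical `max_rounds` budget, since the source describes the iteration without stating a stopping rule.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Critique:
    accepted: bool  # critic MLLM judges the image consistent with the semantics
    new_prompt: Optional[str] = None  # refined prompt suggested when rejected


def generate_criticize(
    semantic_text: str,
    text_to_image: Callable[[str], object],  # hypothetical text-to-image model
    critic: Callable[[str, object], Critique],  # hypothetical critic MLLM
    max_rounds: int = 5,  # assumed iteration budget
) -> tuple[object, int]:
    """Return (final image, number of generation rounds used)."""
    prompt = semantic_text
    image: object = None
    for attempt in range(1, max_rounds + 1):
        image = text_to_image(prompt)  # generate
        verdict = critic(semantic_text, image)  # criticize against the received text
        if verdict.accepted:
            return image, attempt
        if verdict.new_prompt:
            prompt = verdict.new_prompt  # refine the prompt for the next round
    return image, max_rounds  # budget exhausted: keep the last attempt


if __name__ == "__main__":
    mock_t2i = lambda p: p  # the "image" is just the prompt string here
    mock_critic = lambda t, img: Critique(True) if "red" in img else Critique(False, t + ", red")
    print(generate_criticize("a car", mock_t2i, mock_critic))  # ('a car, red', 2)
```

Passing the models in as callables keeps the loop independent of any particular text-to-image model or MLLM.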