The Power of Large Language Models for Wireless Communication System Development

Overview

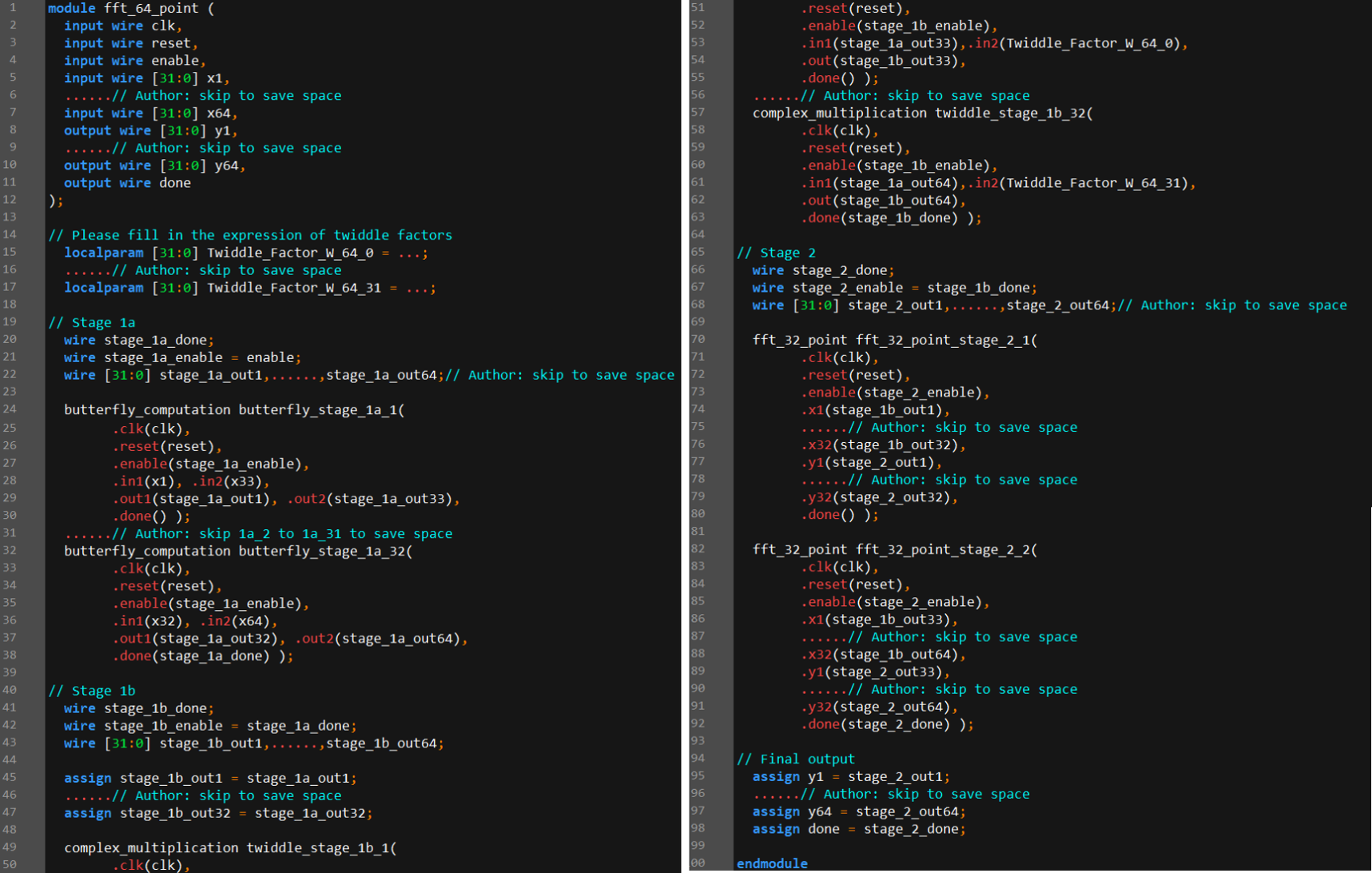

Large language models (LLMs) have garnered significant attention across many research disciplines, including the wireless communication community, where the intersection of LLMs and wireless networking technologies has been actively debated. Recent studies have shown that LLMs can generate hardware description language (HDL) code for simple computation tasks. Developing wireless networking prototypes and products in HDL is far harder, because the computation tasks involved are more complex. We address this challenge by investigating the role of LLMs in FPGA-based hardware development for advanced signal-processing algorithms in wireless communication and networking. We begin by exploring LLM-assisted code refactoring, reuse, and validation, using an open-source software-defined radio (SDR) project as a case study. The case study suggests that an LLM assistant can yield substantial productivity gains for researchers and developers. We then examine the feasibility of using LLMs to generate HDL code for advanced wireless signal processing, using the Fast Fourier Transform (FFT) algorithm as an example. This task presents two unique challenges: scheduling the subtasks within the overall task, and the multi-step reasoning required to solve certain arithmetic problems within the task. To address these challenges, we developed two novel techniques, iterative in-context learning (IICL) and length-limit chain-of-thought (LL-CoT), which culminated in the successful generation of a 64-point Verilog FFT module. Our results demonstrate the potential of LLMs for generalization and imitation, affirming their usefulness in writing HDL code for wireless networking systems. Overall, this work contributes to understanding the role of LLMs in wireless networks and motivates further exploration of their capabilities.

Details

This paper examines the intersection of large language models (LLMs) and wireless communication technologies, and reports encouraging results on using LLMs to prototype wireless systems. Our research highlights the potential of LLMs to facilitate complex FPGA development within wireless systems.

We begin by demonstrating how an LLM can serve as a crucial assistant for FPGA development, providing examples in code refactoring, code reuse, and system validation. Moreover, our paper presents a pioneering exploration of utilizing LLMs to expedite the development of complex signal-processing algorithms for FPGA-empowered SDR systems, using the well-known FFT algorithm as an example.

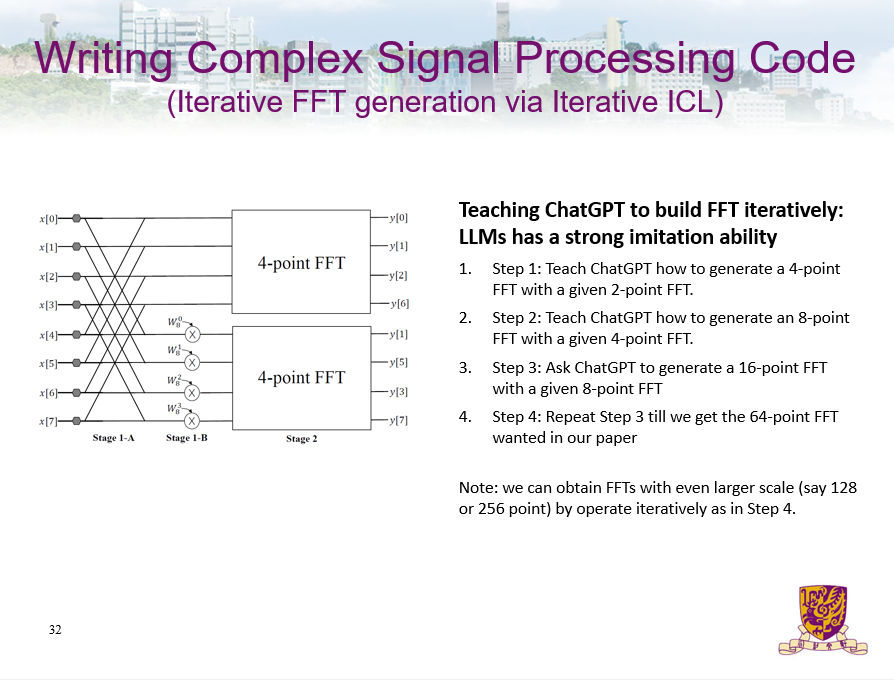

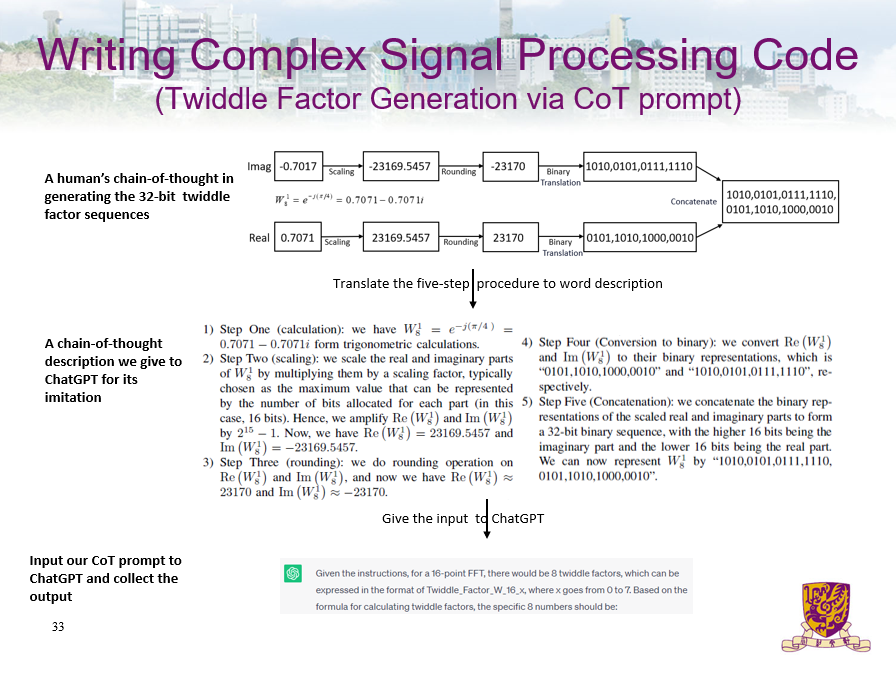

We tackled two primary challenges encountered when implementing the FFT in Verilog: 1) complex mathematical calculations and 2) the efficient scheduling of sub-module executions. The length-limit chain-of-thought (LL-CoT) prompting technique we used for the first problem significantly enhanced the LLM's capacity for multi-step mathematical reasoning, enabling it to handle the intricate task of twiddle factor generation. The iterative in-context learning (IICL) approach we developed for the second problem taught the model to schedule the execution of sub-modules by working through the scheduling task iteratively. The successful use of these techniques underscores the ability of current LLMs to handle complex algorithmic code-generation tasks on SDR platforms, and it marks a substantial step toward their practical application to real-world engineering problems, particularly in the telecommunications domain.
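To make the two challenges concrete, the following Python sketch shows what twiddle factor generation and sub-module scheduling involve for a 64-point FFT. It is an illustrative reference model, not the Verilog module generated in the paper. The radix-2 decimation-in-time structure, the Q1.15 fixed-point format, and all function names (`twiddle`, `to_fixed`, `twiddle_table`, `butterfly_schedule`, `fft_from_schedule`) are assumptions introduced here for illustration.

```python
import cmath
import math

N = 64          # FFT size named in the text
FRAC_BITS = 15  # assumed Q1.15 fixed-point format (not from the source)


def twiddle(k, n=N):
    """Return the twiddle factor W_n^k = exp(-2j*pi*k/n)."""
    return cmath.exp(-2j * math.pi * k / n)


def to_fixed(x, frac_bits=FRAC_BITS):
    """Quantize a real value in [-1, 1] to a signed integer, saturating at the range limits."""
    scale = 1 << frac_bits
    return max(-scale, min(scale - 1, round(x * scale)))


def twiddle_table(n=N):
    """Fixed-point (real, imag) pairs for k = 0 .. n/2 - 1, as a ROM would store them."""
    return [(to_fixed(twiddle(k, n).real), to_fixed(twiddle(k, n).imag))
            for k in range(n // 2)]


def butterfly_schedule(n=N):
    """Per stage, list the butterflies as (top index, bottom index, twiddle index)."""
    stages = []
    for s in range(n.bit_length() - 1):   # log2(n) stages
        half = 1 << s                     # distance between the two butterfly inputs
        span = half << 1                  # size of each sub-FFT at this stage
        step = n // span                  # stride into the length-n twiddle table
        stages.append([(start + j, start + j + half, j * step)
                       for start in range(0, n, span)
                       for j in range(half)])
    return stages


def bit_reverse(i, bits):
    """Reverse the lowest `bits` bits of i (input ordering for decimation in time)."""
    r = 0
    for _ in range(bits):
        r = (r << 1) | (i & 1)
        i >>= 1
    return r


def fft_from_schedule(x):
    """Floating-point FFT that executes the butterflies in scheduled order."""
    n = len(x)
    bits = n.bit_length() - 1
    data = [x[bit_reverse(i, bits)] for i in range(n)]
    for ops in butterfly_schedule(n):
        for top, bot, k in ops:
            t = twiddle(k, n) * data[bot]
            data[top], data[bot] = data[top] + t, data[top] - t
    return data
```

Under these assumptions, a 64-point transform has six stages of 32 butterflies each, and every butterfly needs the correct twiddle index. Deriving those indices and the quantized constants by hand is the kind of multi-step arithmetic and scheduling that the text describes as difficult for an LLM to produce in a single pass.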

Additionally, we conducted benchmark experiments with a wide pool of participants to quantify the productivity gains that LLMs provide in wireless network prototyping. We constructed a set of HDL programming tasks representative of typical challenges. These tasks were given to two groups of volunteers: one composed of undergraduates with related coursework experience, and another consisting of senior postgraduate students from an ASIC- and FPGA-related lab. For comparison, two equivalently diverse groups (one undergraduate and one postgraduate) were assigned the same tasks but were allowed to use an LLM's assistance. We evaluated each group on code quality and completion time. The results indicate that LLM assistance notably reduces coding time, even for experienced engineers, and improves code quality.

For more information

For more details, please see our paper.